docGen - Model Training for Automatic Documentation

docGen: Model Training for Automatic Documentation

In this blog post, we’ll embark on a detailed exploration of the model training process behind DocGenerator, our revolutionary tool for automating documentation generation. This journey takes us through the quintessential stages of a data science project, from data preparation to model evaluation.

Data Preparation

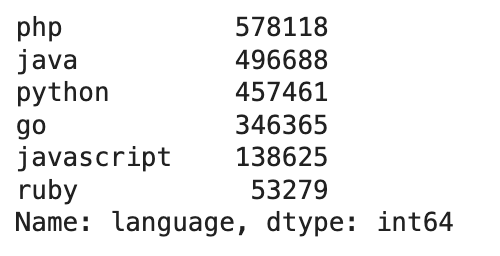

The first step in any data science project is preparing the data. For DocGenerator, we rely on the extensive and diverse CodeSearchNet

dataset, which includes millions of code snippets and their corresponding documentation comments across several programming languages, including Java. This dataset is pivotal for training our model to understand and generate accurate docstrings.

Cleaning and Tokenization

Our initial task is to cleanse the data, removing any irrelevant content such as non-documentation comments or excessive whitespace. This ensures the model learns from clean, relevant data. Next, we tokenize both the code snippets and the docstrings, converting the text into a format that our machine learning model can process. This tokenization step is akin to breaking down the data into understandable pieces for the model, laying the groundwork for effective learning.

Model Selection and Configuration

For DocGenerator, we’ve chosen the T5 (Text-to-Text Transfer Transformer) model, renowned for its flexibility and efficiency in handling text-based tasks. The T5 model serves as the backbone of our tool, allowing us to fine-tune it on the prepared dataset for generating documentation.

T5 Model and Tokenizer Initialization

We initialize the T5 model and its tokenizer with the following lines of code:

1 | tokenizer = T5Tokenizer.from_pretrained('t5-small', legacy=False) |

This setup enables our model to leverage the powerful pre-trained T5 architecture, adapting it to the specific task of generating docstrings from code snippets.

Training Process

The training process involves feeding the tokenized code snippets and docstrings into the model, allowing it to learn the mapping between code and its documentation. We carefully monitor the model’s performance, adjusting parameters such as the learning rate and batch size to optimize the training outcome.

Loss Function and Optimizer

Our training script specifies the loss function and optimizer, key components that guide the model’s learning process. These settings are crucial for ensuring the model effectively minimizes errors during training, gradually improving its ability to generate accurate and relevant docstrings.

Evaluation and Fine-tuning

After training, we evaluate the model’s performance using a separate test set, not seen by the model during training. This step is vital for assessing how well the model generalizes to new, unseen data. We use metrics such as BLEU score to quantitatively measure the quality of the generated docstrings, allowing us to fine-tune the model further if necessary.

Conclusion

The development of DocGenerator represents a significant step forward in automating the documentation process for developers. Through careful data preparation, strategic model selection, and meticulous training and evaluation, we’ve created a tool that not only understands the intricacies of programming languages but also generates comprehensive, accurate documentation, reducing the manual effort required and improving code maintainability.